This year’s CHItaly conference took place in beautiful Salerno. It featured extensive workshops and keynote presentations that provided inspiration for continuing work on conversational agents and trust. The theme of the conference was “Technologies and Methodologies of Human-Computer Interaction in the Third Millennium.” Below are snippets from the event, although they do not provide a complete overview, as the conference covered a wide range of topics.

Language: ‘Written word (institutional) represents the real world.’

How LLMs ‘understand’ the written word is a tricky question, and listening to linguistic experts helped to get a grip on it. A concrete example was a project on Gender Sensitivity, which aims to create an AI tool that helps people write more gender-sensitive texts. Yet research by Giulia Pedrini on the complexity of annotating and understanding the context behind a text shows that even humans can interpret ‘they/them’ in several ways (plural or singular) in regulations or statutes. If humans disagree, how can an LLM or other GenAI model generate the appropriate word? The workshop also showed how strongly the law shapes the way these texts are written, and how this can conflict with the constitution, which forbids discrimination while also granting the right to express oneself. Using only the masculine ‘he’ in a text discriminates against those who do not identify as such, and it raises the question of whether the law then applies only to those addressed as ‘he’. On top of that, LLMs are trained on texts that contain these forms, so their output reproduces them. Using a model to help with gender-sensitive writing is therefore problematic, and here lies the challenge.

Hallucinations: these could be mitigated by training the model in a controlled way and by structuring the vocabulary, distinguishing between terms and concepts and labelling them as such. This way, the model could capture the principles of how humans reason, which makes it possible to model cultural knowledge.

Other research projects analysed and compared the outputs of GenAI models, but it was not clear whether this was done against defined criteria or more loosely and holistically. This makes the analysis seem somewhat arbitrary.

Health and Digital Inclusion

The health sector faces many challenges, including finding the time to diagnose a patient and going through huge amounts of data to predict a disease. The Hessian.ai initiative looks into these issues. One example is using visual analytics to explain how a predictive model arrived at its predictions, which makes it easier to understand the model and adjust it. Another is predicting schizophrenia by combining biomarkers with additional data, such as EEG and linguistic data, to produce a more reliable prediction. Visual analytics could also help doctors by showing a patient’s history in one overview, as the average time a doctor has for a patient is 6-8 minutes. Yet risks arise when documenting patients’ symptoms in the database, as each patient has their own way of communicating symptoms. Other examples from the initiative include social robots that give seniors aged 65+ extra cognitive stimulation, keeping them mentally active and proactive, which is meant to help with dementia. Still, such concepts need rigorous testing and the involvement of this group, which I am not sure is happening already.

Project Col-SAI is about the collaboration between humans and symbiotic AI, where the symbiotic AI learns from the person interacting with it and vice versa. This is where the conversational aspect is most prominent, because such agents or chatbots are assumed to be easy to use for seniors and for people with a disability, thanks to their adaptive and proactive ‘nature’. Calibrated trust is important here, as there is a risk of overtrusting these tools. The challenges of implementation are well known and include issues related to regulations and ethics; for example, a library of dark patterns for chatbots has yet to be established.

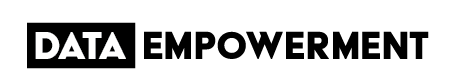

- The workshop on Participatory AI was very interactive and focused on citizen-centric AI, where the starting point is the citizens rather than the technology. It emphasised designing with communities. A typical counterexample is hospitals that buy a solution without involving the community in the development process. During the workshop, we worked with the Impact vs. Power matrix, mapping the stakeholders in a given sector by their power versus the extent to which they are impacted by the implementation of AI. In our group, we found that in the public sector the majority of stakeholders fall in the low-power, high-impact area. One of the solutions we discussed was to involve teachers; however, teachers are already overworked, and putting additional strain on a group that is already under pressure is not ideal. Check out: https://sites.google.com/view/participate-ai/workshop

Co-design with Waves

Nunes’s keynote on day 3 revolved around how to use design to look beyond human needs: how to (co)design in order to discover and listen not to ourselves but to the waves, the sea and more, and how to stop seeing nature as a resource. The keynote included multiple examples of research and design projects exploring these other perspectives. I can’t explain it any better than they do: https://bauhaus-seas.eu/drops/.

Trust in Trust

In the Security and Trust session, Lupetti discussed speculative design and emphasised that biases from the past will reemerge in the future. This highlights the importance of examining our history rather than focusing solely on the future. In addition, presentations on evaluations and guidelines for Conversational Agents & Symbiotic AI explained that guidelines exist, but that the evaluation of these guidelines is missing.

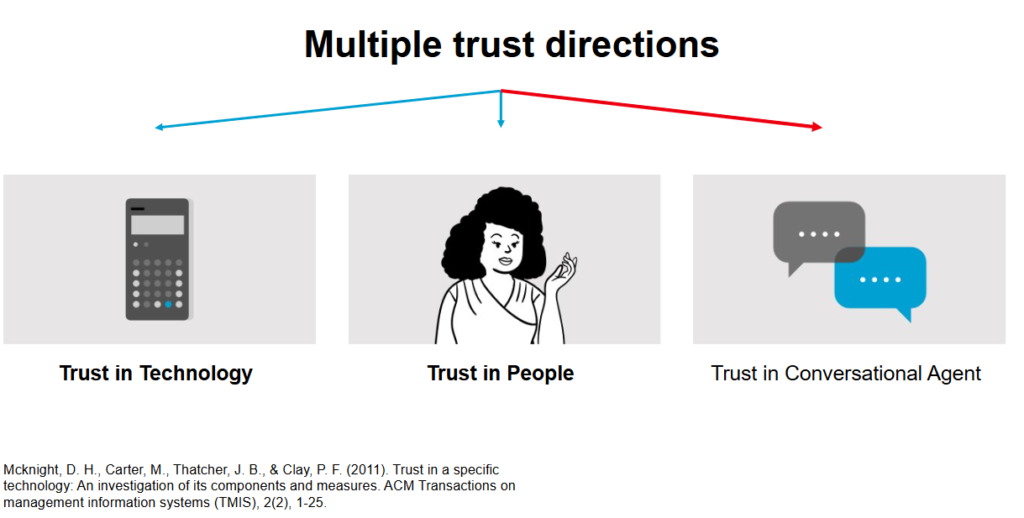

Together with Roelof de Vries, I worked on a scoping review to get an overview of how trust is being designed for conversational agents, and we presented this paper during the session. What we see is that trust is being defined, measured and manipulated in many ways, yet the connection between these is not always clear from the papers. We include a suggestion on choosing a path: trust in technology, trust in people, or perhaps a new direction of trust in conversational agents.

Human-Environment Interaction

The final day concluded with the session Human-Environment Interaction, which was very inspiring. It started with how technology is often designed without consideration for nature. Personas could help here, but they are usually made for humans; an approach based on Planetary Personas was therefore discussed, suggesting that we might make personas that are not for us.

The MUSA (Multilayered Urban Sustainability Action) project looks into how to visualise data in such a way that people feel more connected to their environment. Three guidelines were explained:

- Translating numbers and categorical data into visual cues

- Contextualising geo-tagged data and visual physical cues

- Combining first-person and map perspectives

Finally, Blanco presented their work on an interactive tool that visualises how microplastic fibres are released into the air. It was nice to see how design, in combination with technology, can change people’s perception of something so invisible yet damaging to our air, our ground and, really, everything. Must see: https://www.alexandrafruhstorfer.com/work/the-secret-life-of/